Jan 24, 2026 · Luke

Careti GLM-4.7-Flash Local Run & On-Premise Usage Guide

A demonstration of running GLM-4.7-Flash locally via Ollama on an RTX 3090, plus an update on the Thinking UI. See how on-premise deployment can address security and cost concerns.

With the recent interest in the GLM-4.7-Flash-Latest model, we have received many inquiries about Careti's support for local LLMs and on-premise environments, so we have prepared a video showing it in action.

1. Why GLM-4.7-Flash?

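GLM-4.7-Flash is a new lightweight deployment option in the 30B class (a 30B-A3B MoE, i.e. about 30B total parameters with roughly 3B active per token) that balances performance and efficiency. In the comparison below it leads on most benchmarks, with the largest margins on agentic coding and tool-use tasks (SWE-bench Verified, τ²-Bench, BrowseComp). It is essentially tied with GPT-OSS-20B on AIME 25 and slightly behind Qwen3-30B-Thinking on LiveCodeBench v6.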

| Benchmark | GLM-4.7-Flash | Qwen3-30B-Thinking | GPT-OSS-20B |
|---|---|---|---|
| AIME 25 | 91.6 | 85.0 | 91.7 |
| GPQA | 75.2 | 73.4 | 71.5 |
| SWE-bench (Verified) | 59.2 | 22.0 | 34.0 |
| τ²-Bench | 79.5 | 49.0 | 47.7 |
| BrowseComp | 42.8 | 2.29 | 28.3 |
| LiveCodeBench v6 (LCB) | 64.0 | 66.0 | 61.0 |
| Humanity's Last Exam (HLE) | 14.4 | 9.8 | 10.9 |

In particular, its score of 59.2 on SWE-bench Verified, which evaluates practical coding skills on real GitHub issues, is far ahead of the competing models (22.0 and 34.0). This suggests that coding agents approaching the quality of commercial APIs can run even in a local environment.

2. Serve GLM-4.7-Flash Locally

GLM-4.7-Flash can be deployed locally with the vLLM and SGLang inference frameworks; both currently support it on their main branches. Detailed deployment instructions are available in the official GitHub repository (zai-org/GLM-4.5).
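Both vLLM and SGLang can expose an OpenAI-compatible HTTP API, so once a server is running, a client talks to it with an ordinary chat-completions request. The sketch below builds such a request using only the standard library. The endpoint assumes vLLM's default port 8000, and the model ID `zai-org/GLM-4.7-Flash` is an assumption; use whatever name your server was actually launched with, as described in the repository's instructions.

```python
import json
import urllib.request

# Assumption: vLLM's OpenAI-compatible server on its default port 8000.
BASE_URL = "http://localhost:8000/v1"
# Assumption: hypothetical model ID; use the name the server was launched with.
MODEL_ID = "zai-org/GLM-4.7-Flash"


def build_chat_request(prompt: str, max_tokens: int = 512) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions request."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(req: urllib.request.Request) -> str:
    """Send the request to a running local server and return the reply text."""
    with urllib.request.urlopen(req, timeout=300) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request("Summarize this repository's README.")
    print(req.full_url)
    # print(send(req))  # uncomment once a local server is running
```

Because the API shape is OpenAI-compatible, the same client code should work against either framework by changing only `BASE_URL` and `MODEL_ID`.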

3. Utilization in Local and On-Premise Environments

The video assumes an environment where external APIs cannot be used for security or cost reasons, and shows a local model running via Ollama on a single RTX 3090.

- Task Performed: A practical scenario in which the model reviews a development plan document for a Markdown viewer/editor and derives follow-up tasks.

- Test Results: Document analysis and development guide writing proceeded smoothly with no cloud connection.
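For readers who want to try a similar setup, Ollama serves a local HTTP API on port 11434, and a non-streaming call to its `/api/chat` endpoint can be sketched as below. The model tag `glm-4.7-flash` is an assumption (check `ollama list` for the tag you pulled), and the prompt is only a stand-in for the document-review task shown in the video, not the prompt Careti actually sends.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local endpoint
MODEL_TAG = "glm-4.7-flash"  # assumption: check `ollama list` for the real tag


def build_payload(document: str) -> dict:
    """Ask the model to review a plan document and list follow-up tasks."""
    return {
        "model": MODEL_TAG,
        "messages": [
            {"role": "system", "content": "You are a careful software reviewer."},
            {
                "role": "user",
                "content": "Review this development plan and list follow-up tasks:\n\n"
                + document,
            },
        ],
        "stream": False,  # return one JSON object instead of a stream of chunks
    }


def ask_ollama(payload: dict, timeout: float = 600.0) -> str:
    """POST the payload to a locally running Ollama server (no cloud involved)."""
    req = urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)["message"]["content"]
```

All traffic stays on `localhost`, which is the property that matters in the security-constrained scenario described above.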

4. Careti Update: Thinking UI Application

In the currently released version, the Ollama integration can feel slow because the model's reasoning (Thinking) process is not visible to the user.

- Patch Plan: The Careti build used in the video is an upcoming version patched to expose the Thinking process.

- Improvement: Users can follow the model's internal reasoning in real time, which makes the workflow easier to understand and reduces the perceived waiting time.
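To illustrate the idea (this is not Careti's implementation), recent Ollama versions accept a `think` option on `/api/chat`; for models that support it, streamed newline-delimited JSON chunks carry reasoning text in `message.thinking`, separately from the answer in `message.content`. The sketch below routes those two streams to separate callbacks so a UI could render reasoning as it arrives. The sample chunks are made up for illustration, and the field names should be checked against the Ollama API documentation for your version.

```python
import json
from typing import Callable, Iterable


def route_stream(
    lines: Iterable[str],
    on_thinking: Callable[[str], None],
    on_content: Callable[[str], None],
) -> None:
    """Dispatch each NDJSON chunk from a streamed /api/chat call.

    Reasoning tokens (message.thinking) and answer tokens (message.content)
    go to separate callbacks, so a UI can show the reasoning live instead
    of a blank wait. Stops at the first chunk marked done.
    """
    for line in lines:
        line = line.strip()
        if not line:
            continue
        chunk = json.loads(line)
        message = chunk.get("message", {})
        if message.get("thinking"):
            on_thinking(message["thinking"])
        if message.get("content"):
            on_content(message["content"])
        if chunk.get("done"):
            break


# Made-up chunks in the shape Ollama streams when "think": true is set.
sample = [
    '{"message": {"role": "assistant", "content": "", "thinking": "Check the plan"}, "done": false}',
    '{"message": {"role": "assistant", "content": "", "thinking": " for gaps."}, "done": false}',
    '{"message": {"role": "assistant", "content": "1. Add tests."}, "done": false}',
    '{"message": {"role": "assistant", "content": ""}, "done": true}',
]

thinking, answer = [], []
route_stream(sample, thinking.append, answer.append)
print("".join(thinking))  # Check the plan for gaps.
print("".join(answer))    # 1. Add tests.
```

In a real UI the callbacks would append to a collapsible reasoning panel and to the main reply, respectively.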

5. Expected Performance by Hardware (RTX 3090 vs 5090)

Generation on the RTX 3090 in the video may feel somewhat slow. This is mainly due to the card's memory bandwidth, since generating each token requires reading the model's active weights from VRAM. The expected difference when moving to an RTX 5090 is as follows:

| Category | RTX 3090 (this video) | RTX 5090 (expected) |
|---|---|---|
| Inference speed (tokens/s) | Approx. 80-100 | Approx. 180-220 |
| Memory bandwidth | 936 GB/s | 1,792 GB/s |
| VRAM capacity | 24 GB | 32 GB |

With the RTX 5090, a speedup of roughly 2x is expected from the bandwidth increase alone (1,792 / 936 ≈ 1.9). The extra 8 GB of VRAM also leaves more room for the KV cache, which makes it easier to process longer source code in a single context.
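The reasoning above can be checked with back-of-the-envelope arithmetic. If decoding is bandwidth-bound, speed at a fixed model and quantization scales roughly with memory bandwidth, and whatever VRAM the weights do not occupy is left for the KV cache. The sketch uses the table's bandwidth and capacity figures; the 4.5 bits per weight (typical of a 4-bit quantization) is an assumption, and runtime overhead is ignored, so the numbers are first-order estimates only.

```python
RTX_3090 = {"bandwidth_gbs": 936.0, "vram_gb": 24.0}
RTX_5090 = {"bandwidth_gbs": 1792.0, "vram_gb": 32.0}

TOTAL_PARAMS = 30e9     # 30B-A3B MoE: all experts must reside in VRAM
BITS_PER_WEIGHT = 4.5   # assumption: a typical 4-bit quantization


def scaled_tps(measured_tps: float, old_bw: float, new_bw: float) -> float:
    """Scale a measured decode speed by the bandwidth ratio (bandwidth-bound assumption)."""
    return measured_tps * new_bw / old_bw


def kv_headroom_gb(vram_gb: float) -> float:
    """VRAM left for the KV cache after loading the weights (overhead ignored)."""
    weights_gb = TOTAL_PARAMS * BITS_PER_WEIGHT / 8 / 1e9
    return vram_gb - weights_gb


ratio = RTX_5090["bandwidth_gbs"] / RTX_3090["bandwidth_gbs"]
print(f"bandwidth ratio: {ratio:.2f}x")
for tps in (80, 100):
    est = scaled_tps(tps, RTX_3090["bandwidth_gbs"], RTX_5090["bandwidth_gbs"])
    print(f"{tps} TPS on 3090 -> ~{est:.0f} TPS on 5090")
print(f"KV headroom on 3090: {kv_headroom_gb(RTX_3090['vram_gb']):.1f} GB")
print(f"KV headroom on 5090: {kv_headroom_gb(RTX_5090['vram_gb']):.1f} GB")
```

Under these assumptions the bandwidth ratio alone maps 80-100 TPS to roughly 150-190 TPS, and the 5090 leaves about twice the KV-cache headroom (about 15 GB versus 7 GB). Real gains also depend on compute throughput, kernels, and quantization format.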

We hope this provides practical data for those considering a local AI development environment.

More posts

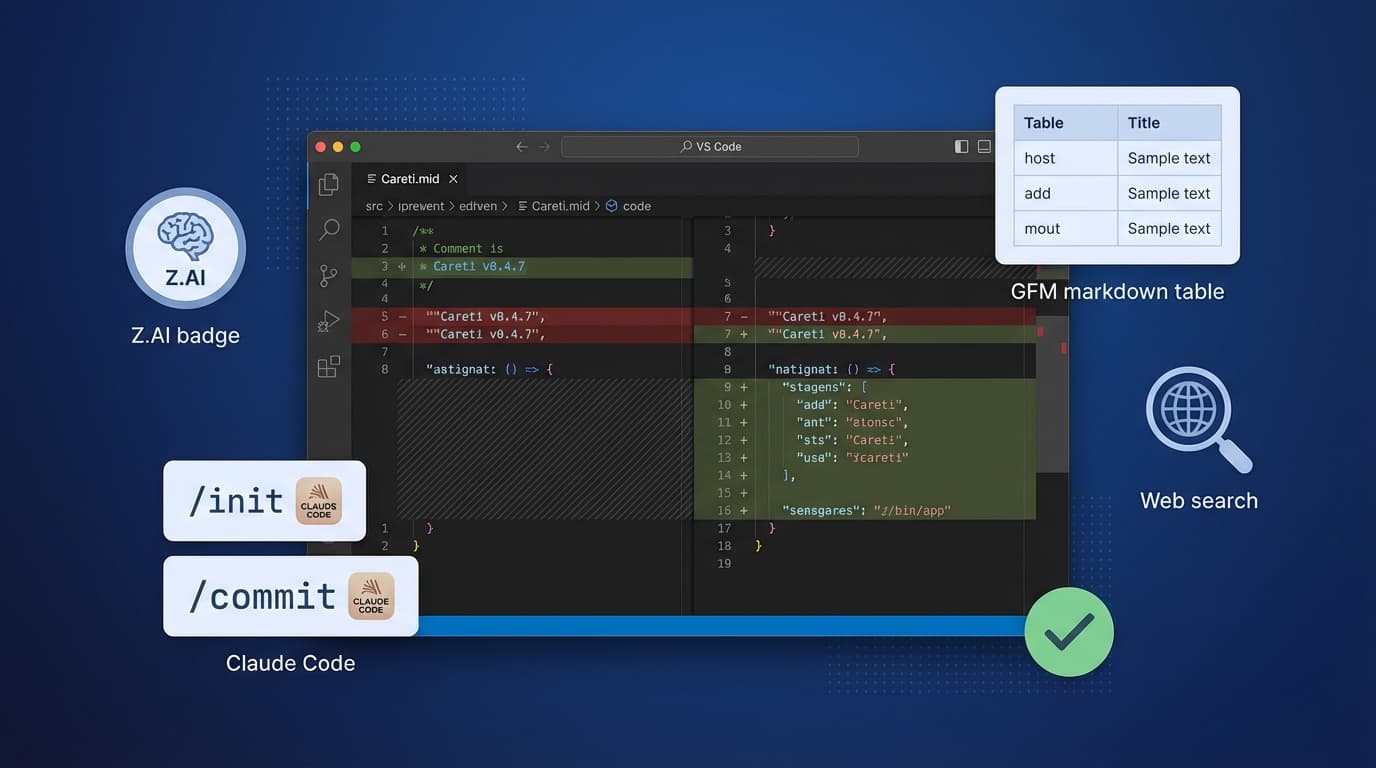

Careti v0.4.7 adds Z.AI GLM-4.7 model, Claude Code compatible command system, SmartEditEngine improvements, and UI enhancements.

Learn how to generate tarot card images using Upstage Solar2 model and HWP document reading in K-Cursor Careti. Discover Careti's unique document processing capabilities and image generation workflow as a sovereign AI solution.